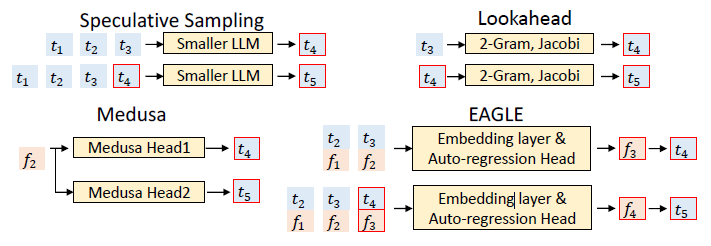

1. Introduction to Speculative Decoding

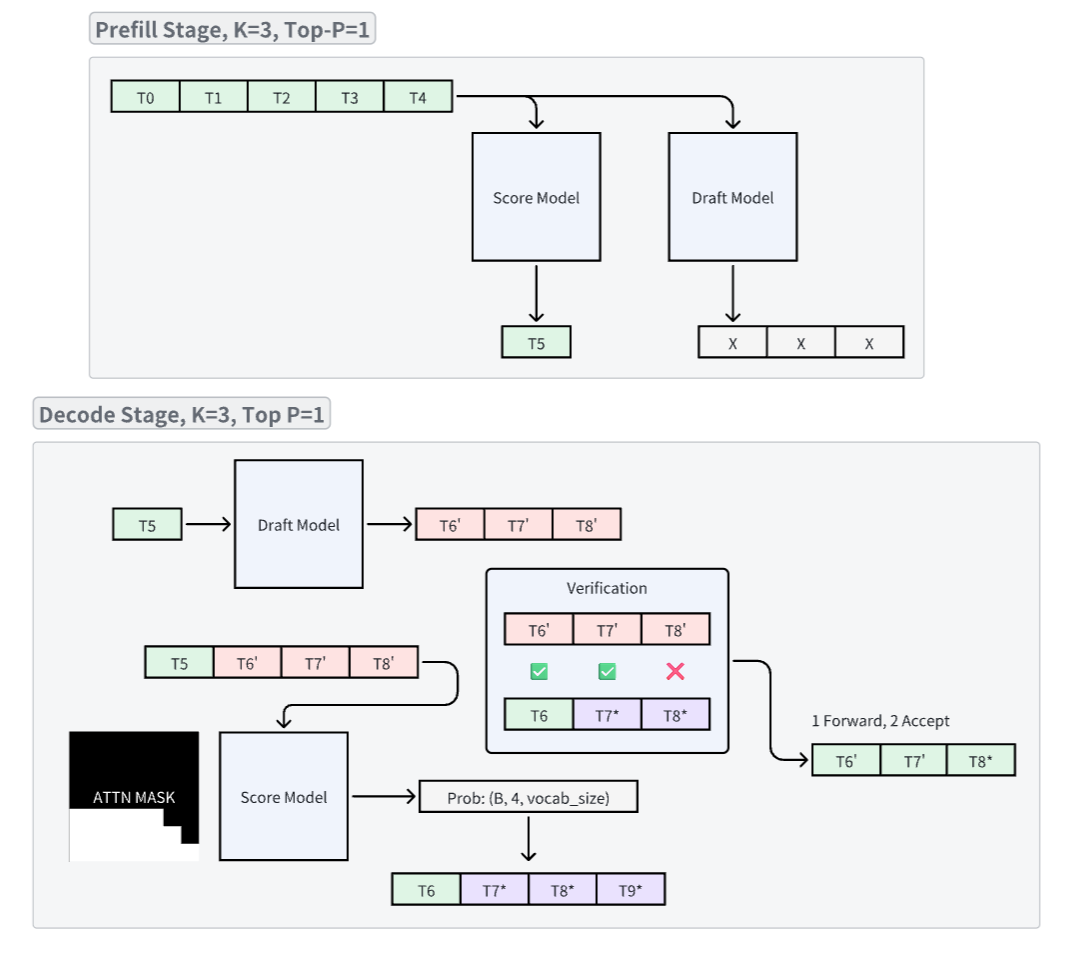

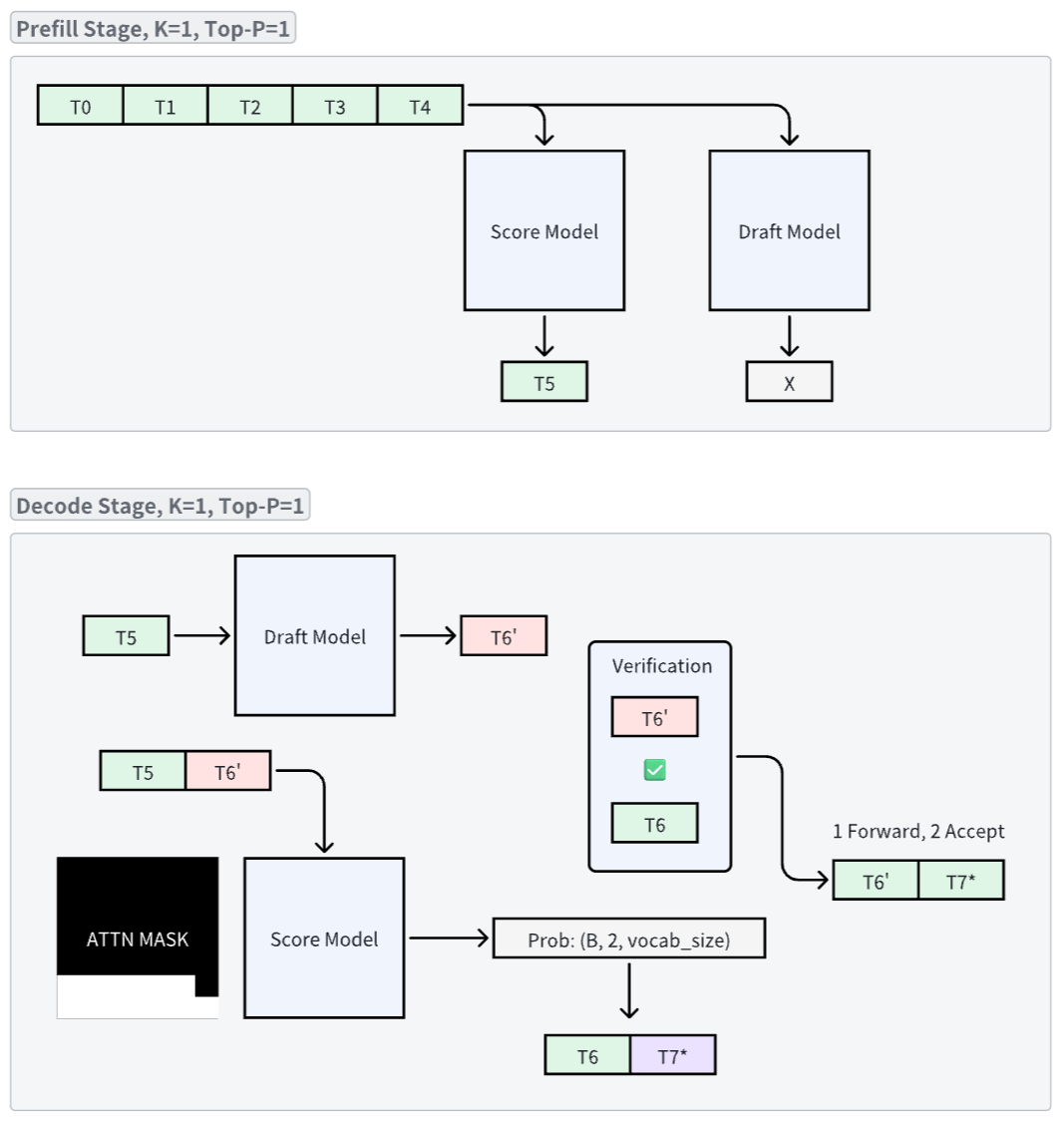

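Given a scorer model S (for example, LLAMA-3-70B) and a draft model D (for example, LLAMA-3-8B), the process of speculative decoding can be described as follows:

- Propose: D autoregressively generates k candidate tokens. This is cheap because D is small.

- Score: S processes all k candidates in a single forward pass and produces its own prediction for each of the k + 1 positions.

- Accept: candidates are accepted from left to right while they agree with S. Under greedy decoding this is an exact match; when sampling, it is a rejection-sampling test. At the first rejection, the token is replaced by one taken from S's distribution: its argmax under greedy decoding, or a sample from the adjusted residual distribution when sampling. If all k candidates are accepted, S's prediction for the next position is appended. Each step therefore yields between 1 and k + 1 tokens.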
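Speculative decoding can be sketched in Python for the greedy case, where a candidate is accepted only if it equals the scorer's own argmax token. This is a minimal sketch, not vLLM's implementation. The `draft` and `target` callables are hypothetical stand-ins that map a token list to the next greedy token. The target is called once per position for readability, whereas a real system scores all k + 1 positions in one forward pass. The sampled variant, which uses rejection sampling, is not shown.

```python
from typing import Callable, List

# Hypothetical model interface: maps a token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def speculative_step(prefix: List[int], draft: NextToken, target: NextToken, k: int) -> List[int]:
    """One greedy propose/score/accept step; returns the newly emitted tokens."""
    # 1. Propose: the draft model generates k tokens autoregressively.
    proposal: List[int] = []
    for _ in range(k):
        proposal.append(draft(prefix + proposal))
    # 2. Score: the target's greedy token at each of the k + 1 positions.
    #    (A real system computes these in one batched forward pass.)
    verified = [target(prefix + proposal[:i]) for i in range(k + 1)]
    # 3. Accept the longest prefix of the proposal that matches the target.
    accepted: List[int] = []
    for drafted, expected in zip(proposal, verified):
        if drafted != expected:
            break
        accepted.append(drafted)
    # The target contributes one more token: the correction at the first
    # mismatch, or a bonus token if all k proposals were accepted.
    accepted.append(verified[len(accepted)])
    return accepted


def generate(prompt: List[int], draft: NextToken, target: NextToken, k: int, max_new_tokens: int) -> List[int]:
    out = list(prompt)
    while len(out) - len(prompt) < max_new_tokens:
        out.extend(speculative_step(out, draft, target, k))
    return out[: len(prompt) + max_new_tokens]
```

Every step emits at least one token chosen by the target, so in this greedy setting the output matches plain greedy decoding with the target model. The gain comes from steps where several draft tokens are accepted at once.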

2. How Speculative Decoding Works in vLLM

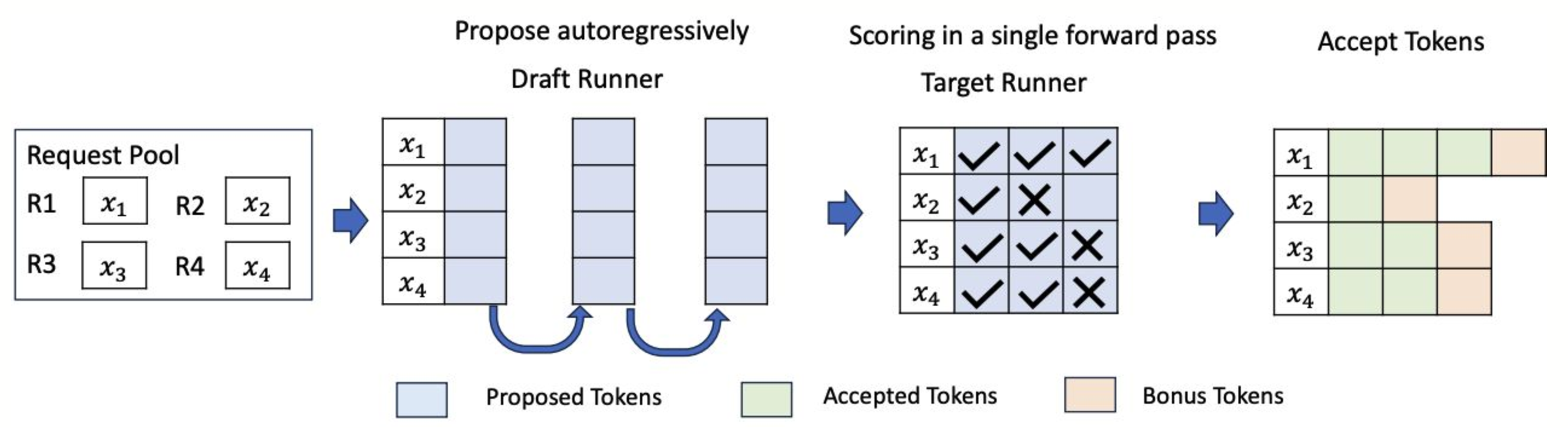

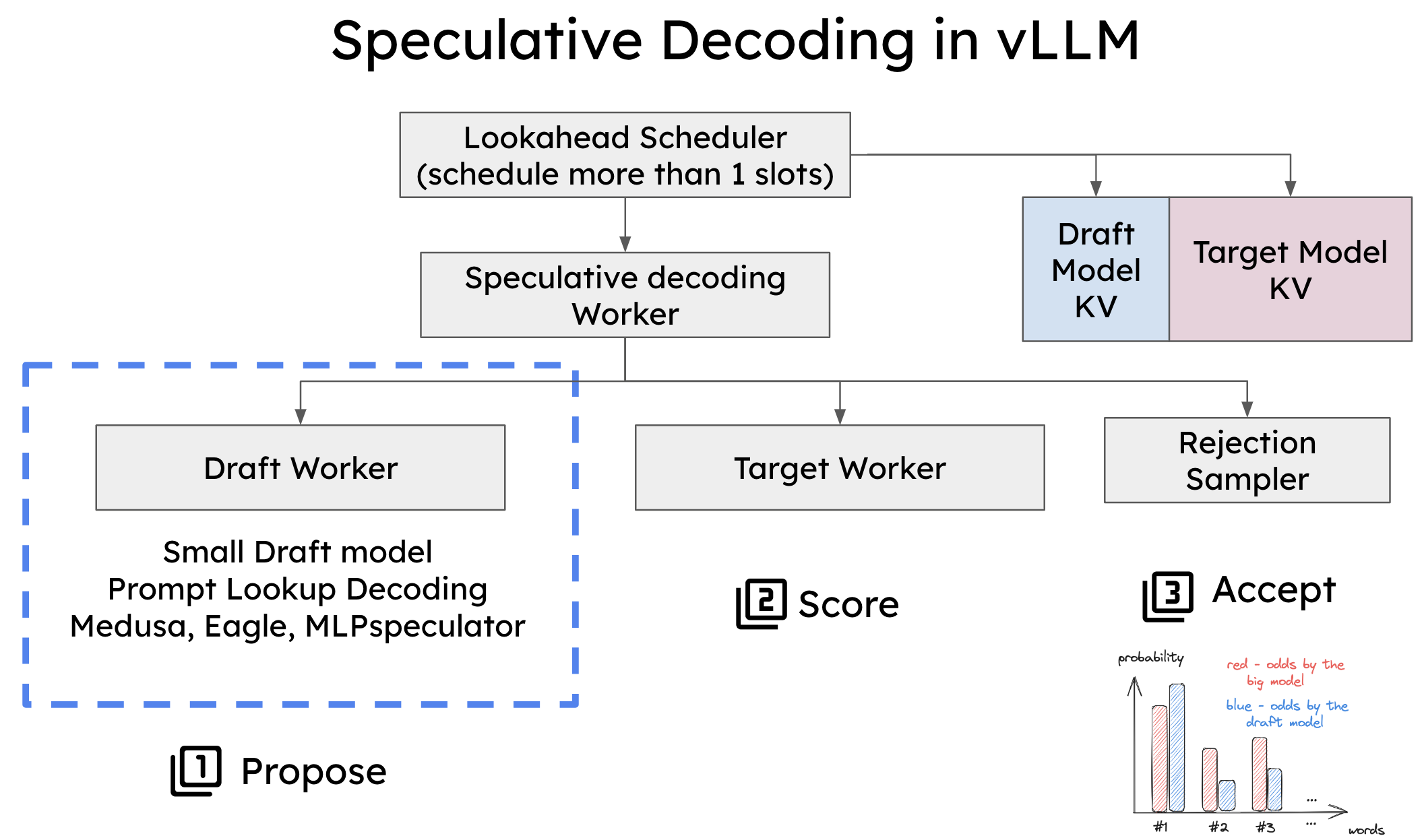

In vLLM, speculative decoding is integrated with the system’s continuous batching architecture, where different requests are processed together in a single batch, enabling higher throughput. vLLM uses two key components to implement this:

- Draft Runner: This runner executes the smaller proposer (draft) model to generate candidate tokens.

- Target Runner: The target runner verifies the tokens by running the larger scorer model.

vLLM is optimized to run this process efficiently, allowing speculative decoding to work seamlessly with continuous batching and increasing overall system performance.

To implement speculative decoding in vLLM, two crucial components had to be modified:

- Scheduler: The scheduler was adjusted to handle multiple token slots within a single forward pass, enabling the simultaneous generation and verification of several tokens.

- Memory Manager: The memory manager now handles the KV cache for both the draft and scorer models, ensuring smooth processing during speculative decoding.
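Here is a rough sketch of what the scheduler and memory-manager changes mean for a paged KV cache. Before a speculative step, each sequence needs room for its existing tokens plus one slot per speculative ("lookahead") token that the scorer writes in the same forward pass. The helper names, the slot-counting rule, and the block size of 16 are illustrative assumptions, not vLLM's exact accounting.

```python
import math


def kv_blocks_needed(seq_len: int, num_lookahead_slots: int, block_size: int = 16) -> int:
    """Hypothetical helper: KV-cache blocks a sequence must own before a step.

    seq_len: tokens already in the sequence.
    num_lookahead_slots: extra slots reserved for speculative tokens scored
        in the same forward pass (0 for ordinary decoding).
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return math.ceil((seq_len + num_lookahead_slots) / block_size)


def extra_blocks_for_speculation(seq_len: int, k: int, block_size: int = 16) -> int:
    # Additional blocks the memory manager must find compared with normal decoding.
    return kv_blocks_needed(seq_len, k, block_size) - kv_blocks_needed(seq_len, 0, block_size)
```

For example, a 30-token sequence fits in 2 blocks of 16 for ordinary decoding. With 4 lookahead slots it needs 34 slots, so a third block must be allocated before the step can run.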

3. Types of Speculative Decoding Supported in vLLM

3.1. Draft Model-Based Speculative Decoding

This is the most commonly used form of speculative decoding, where a smaller model predicts the next tokens, and a larger model verifies them. A common example would be using a Llama 68M model to predict tokens for a Llama 2 70B model. This approach requires careful selection of the draft model to balance accuracy and overhead.

Choosing the correct draft model is essential for maximizing the efficiency of speculative decoding. The draft model needs to be small enough to avoid creating significant overhead but still accurate enough to provide a meaningful performance boost.

However, selecting the right draft model can be challenging. For example, for models like Llama 3, finding a suitable draft model is difficult because of differences in vocabulary size. Speculative decoding requires the draft and target models to share the same vocabulary, which in some cases limits where it can be used. Therefore, the following sections introduce several draft-model-free speculative decoding methods.
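Because the scorer verifies draft proposals token id by token id, both models must map every token string to the same id. The sketch below checks this on two plain `{token: id}` dictionaries, such as the mapping returned by a Hugging Face tokenizer's `get_vocab()`. The helper names are hypothetical.

```python
from typing import Dict, List


def vocab_mismatches(draft_vocab: Dict[str, int], target_vocab: Dict[str, int]) -> List[str]:
    """Token strings that are missing from one vocabulary or map to different ids."""
    mismatched = []
    for token in draft_vocab.keys() | target_vocab.keys():
        # .get returns None for a missing token, which counts as a mismatch.
        if draft_vocab.get(token) != target_vocab.get(token):
            mismatched.append(token)
    return sorted(mismatched)


def can_share_tokens(draft_vocab: Dict[str, int], target_vocab: Dict[str, int]) -> bool:
    return not vocab_mismatches(draft_vocab, target_vocab)
```

A draft model whose vocabulary is a strict subset of the target's already fails this check, which is why a difference in vocabulary size alone can rule out an otherwise attractive draft model.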

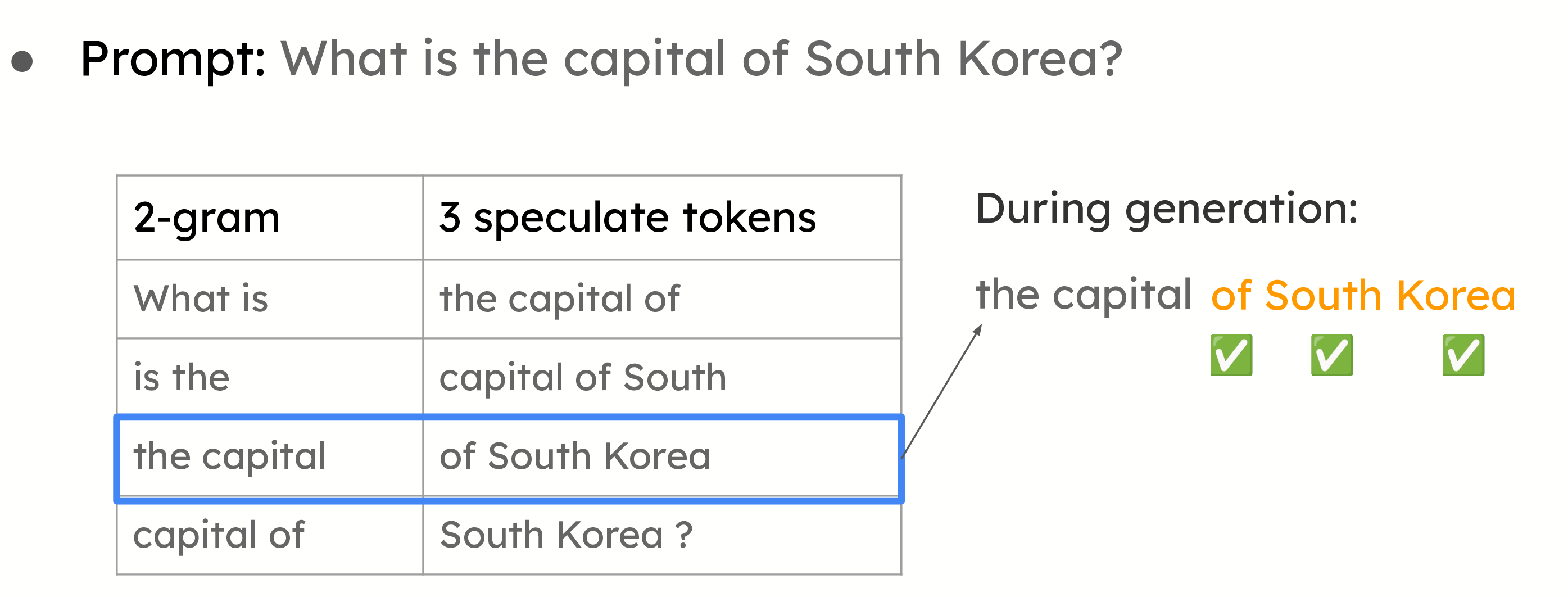

3.2. Prompt Lookup Decoding

Also known as n-gram matching, this approach is effective for use cases like summarization and question answering, where there is significant overlap between the prompt and the answer. Instead of using a small model to propose tokens, the system proposes continuations of n-grams that already appear in the prompt. This works particularly well when the large model repeats parts of the prompt in its answer.
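Here is a minimal sketch of an n-gram (prompt lookup) proposer. It takes the last n tokens, looks for the most recent earlier occurrence of that n-gram in the sequence, and proposes the tokens that followed it, trying longer n-grams first. The parameter names `k`, `max_n`, and `min_n` are illustrative. vLLM's own proposer and its search order may differ.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def propose_ngram(tokens: Sequence[T], k: int = 3, max_n: int = 3, min_n: int = 1) -> List[T]:
    """Propose up to k draft tokens by prompt lookup (n-gram matching)."""
    for n in range(max_n, min_n - 1, -1):
        if len(tokens) <= n:
            continue  # need at least one earlier position to match against
        suffix = list(tokens[-n:])
        # Scan right to left for the most recent earlier occurrence of the suffix,
        # excluding the suffix's own position (start = len(tokens) - n).
        for start in range(len(tokens) - n - 1, -1, -1):
            if list(tokens[start:start + n]) == suffix:
                # The tokens that followed that occurrence become the proposal.
                return list(tokens[start + n:start + n + k])
    return []  # no match: fall back to normal decoding for this step
```

For instance, in the token sequence `the cat sat on the mat . the cat`, the trigram `. the cat` has no earlier occurrence, but the bigram `the cat` does. With k = 3, the proposer returns `sat on the`, and the scorer then verifies these tokens exactly as it would verify a draft model's proposals.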

4. MEDUSA

4.1. Roadmap

- [vllm][ISSUE] | Can vLLM support medusa head? #1023

- [vllm][ISSUE] | [Discussion] Will vLLM consider using Speculative Sampling to accelerating LLM decoding? #1171

- [vllm][PR] | [Speculative Decoding] Medusa Implementation with Top-1 proposer #4978

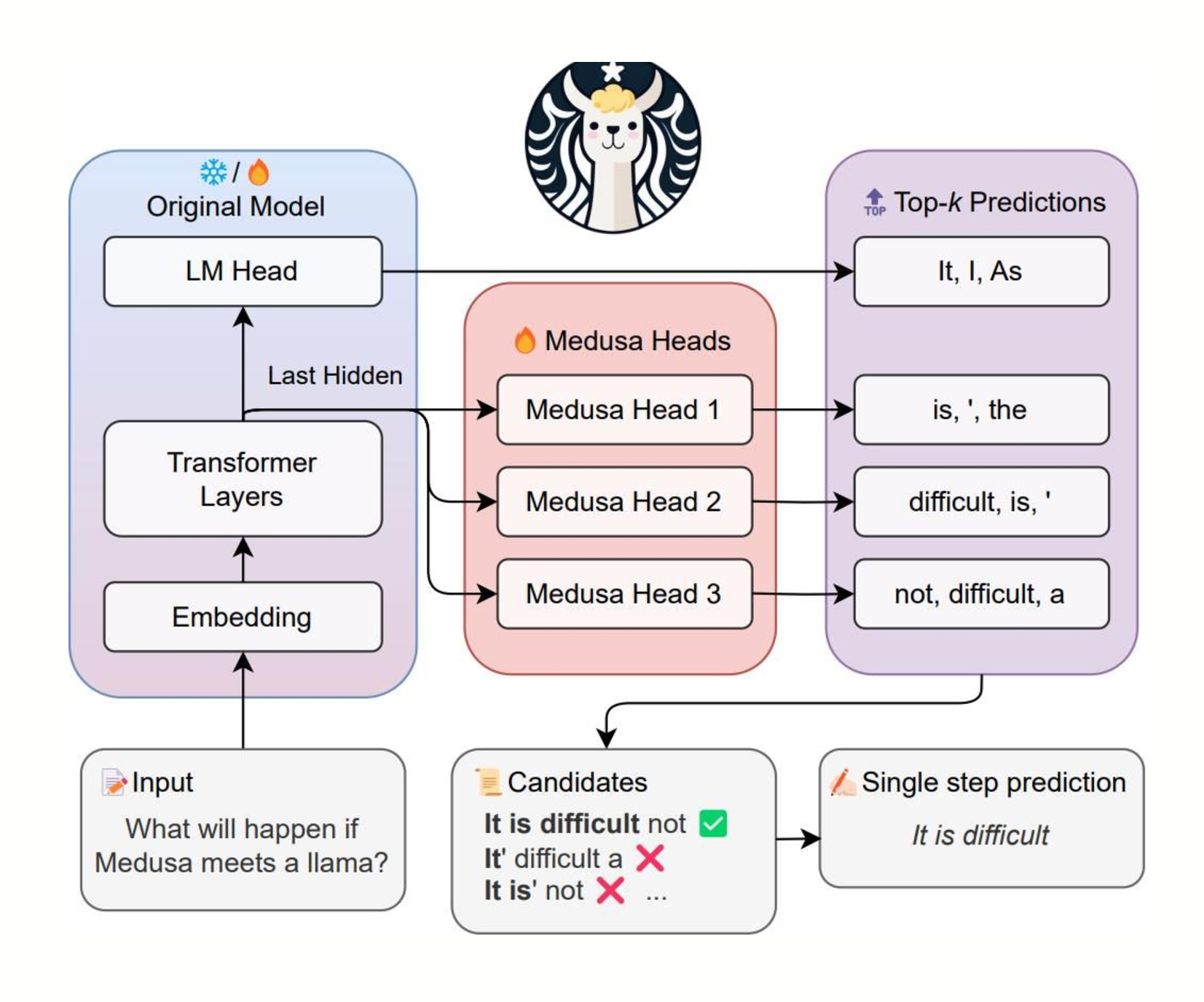

4.2. MEDUSA Heads

MEDUSA heads are additional decoding heads appended to the last hidden states of the original model.

Specifically, given the original model’s last hidden states $h_t$ at position $t$, we add $K$ decoding heads to $h_t$. The $k$-th head is used to predict the token in the $(t + k + 1)$-th position of the next tokens (the original language model head is used to predict the $(t + 1)$-th position).

$$
\begin{aligned}
p_{t}^{(k)} &= \mathrm{softmax}\left(W_{2}^{(k)}\cdot\left(\mathrm{SiLU}(W_{1}^{(k)}\cdot h_{t})+h_{t}\right)\right), \\
&\mathrm{where~}W_{2}^{(k)}\in\mathbb{R}^{d\times V},\ W_{1}^{(k)}\in\mathbb{R}^{d\times d}.
\end{aligned}
$$

Unlike a draft model, MEDUSA heads are trained in conjunction with the original backbone model, which can remain frozen during training (MEDUSA-1) or be trained together with the heads (MEDUSA-2).
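A single MEDUSA head can be transcribed directly from the formula. This plain-Python sketch uses the row-vector convention (h · W), which makes the stated shapes $W_1^{(k)} \in \mathbb{R}^{d\times d}$ and $W_2^{(k)} \in \mathbb{R}^{d\times V}$ line up. Toy weights stand in for trained ones. `medusa_top1` is a hypothetical helper that mirrors a top-1 proposer by taking the argmax of each head.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def silu(x: float) -> float:
    # SiLU(x) = x * sigmoid(x), written to avoid overflow in math.exp.
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def vec_mat(v: List[float], m: Matrix) -> List[float]:
    # Row-vector product v @ m for v of length d and m of shape d x n.
    return [sum(v[i] * m[i][j] for i in range(len(v))) for j in range(len(m[0]))]


def softmax(z: List[float]) -> List[float]:
    top = max(z)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


def medusa_head(h: List[float], W1: Matrix, W2: Matrix) -> List[float]:
    # Residual block SiLU(h W1) + h, then projection to the vocabulary with W2.
    hidden = [silu(a) + b for a, b in zip(vec_mat(h, W1), h)]
    return softmax(vec_mat(hidden, W2))


def medusa_top1(h: List[float], heads: List[Tuple[Matrix, Matrix]]) -> List[int]:
    # Head k (1-based) proposes the token at position t + k + 1; take each argmax.
    preds = []
    for W1, W2 in heads:
        p = medusa_head(h, W1, W2)
        preds.append(max(range(len(p)), key=p.__getitem__))
    return preds
```

All heads read the same hidden state $h_t$, so the K proposals come from one backbone forward pass instead of K sequential draft-model calls.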

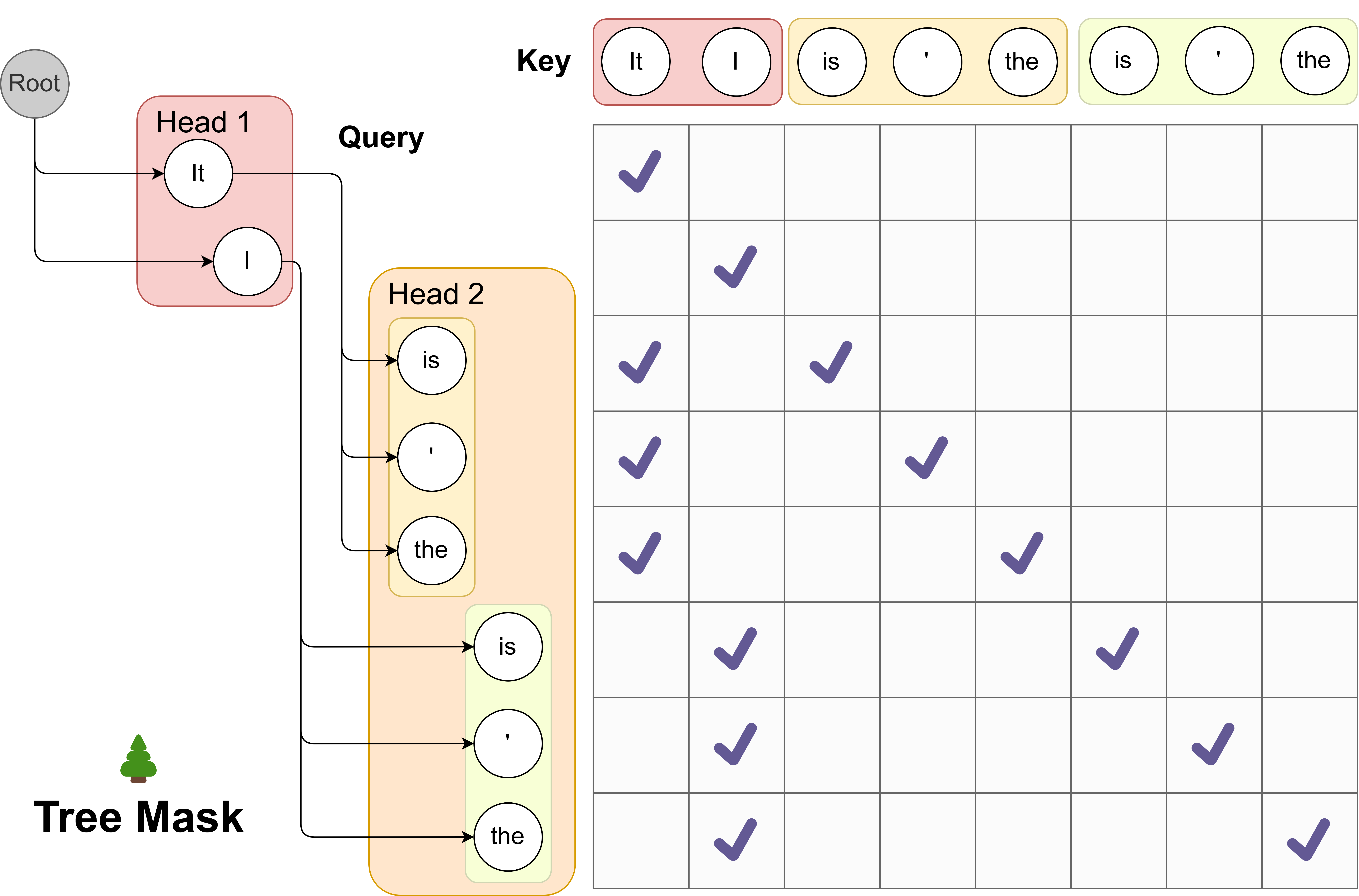

4.3. Tree Attention

The top-2 predictions from the first MEDUSA head and the top-3 from the second result in a total of $2 \times 3 = 6$ candidates. Each of these candidates corresponds to a distinct branch within the tree structure.

To guarantee that each token only accesses its predecessors, an attention mask is constructed that lets each token attend only to its ancestors on the same branch (and to the shared prefix), never to tokens on other branches.
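The candidate tree and its mask can be sketched in a few lines. Each tree node is identified by the path of token choices that leads to it. A node may attend to another node only if that node's path is a prefix of its own, meaning it is an ancestor or the node itself. The committed prefix, which every node may attend to, is left out for brevity, and the function names are hypothetical.

```python
from itertools import product
from typing import List, Sequence, Tuple

Path = Tuple[str, ...]


def build_tree(topk_per_head: Sequence[Sequence[str]]) -> List[Path]:
    # Level d holds every combination of the top-k choices of heads 1..d,
    # so parents always appear before their children.
    nodes: List[Path] = []
    for depth in range(1, len(topk_per_head) + 1):
        nodes.extend(product(*topk_per_head[:depth]))
    return nodes


def tree_attention_mask(nodes: List[Path]) -> List[List[int]]:
    # mask[i][j] == 1 iff node j lies on node i's branch (ancestor or self).
    return [[int(nj == ni[: len(nj)]) for nj in nodes] for ni in nodes]
```

With top-2 choices from the first head and top-3 from the second, this yields 2 + 6 = 8 tree tokens. The 6 leaves are the candidates, and all of them are verified in a single forward pass.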

5. EAGLE

5.1. Roadmap

5.2. Detailed Process

The following Feishu drawboard shows the detailed process of speculative decoding with EAGLE in vLLM:

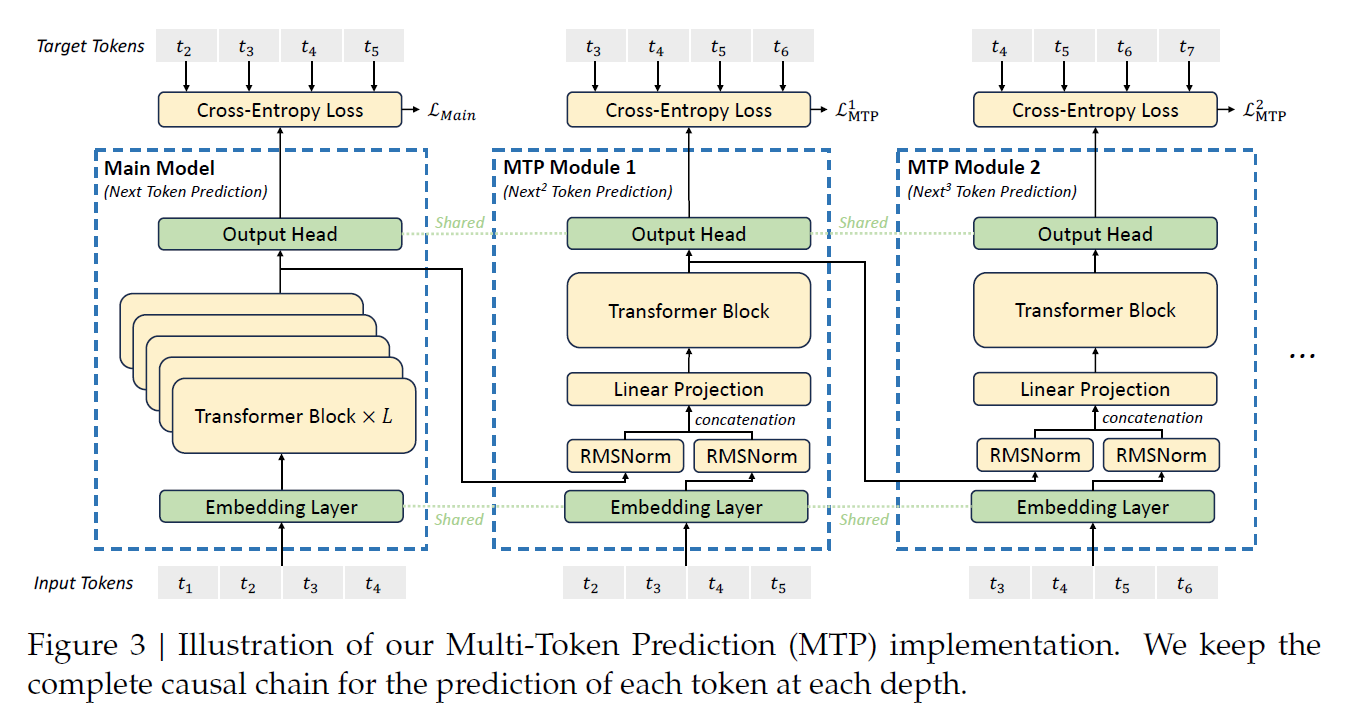

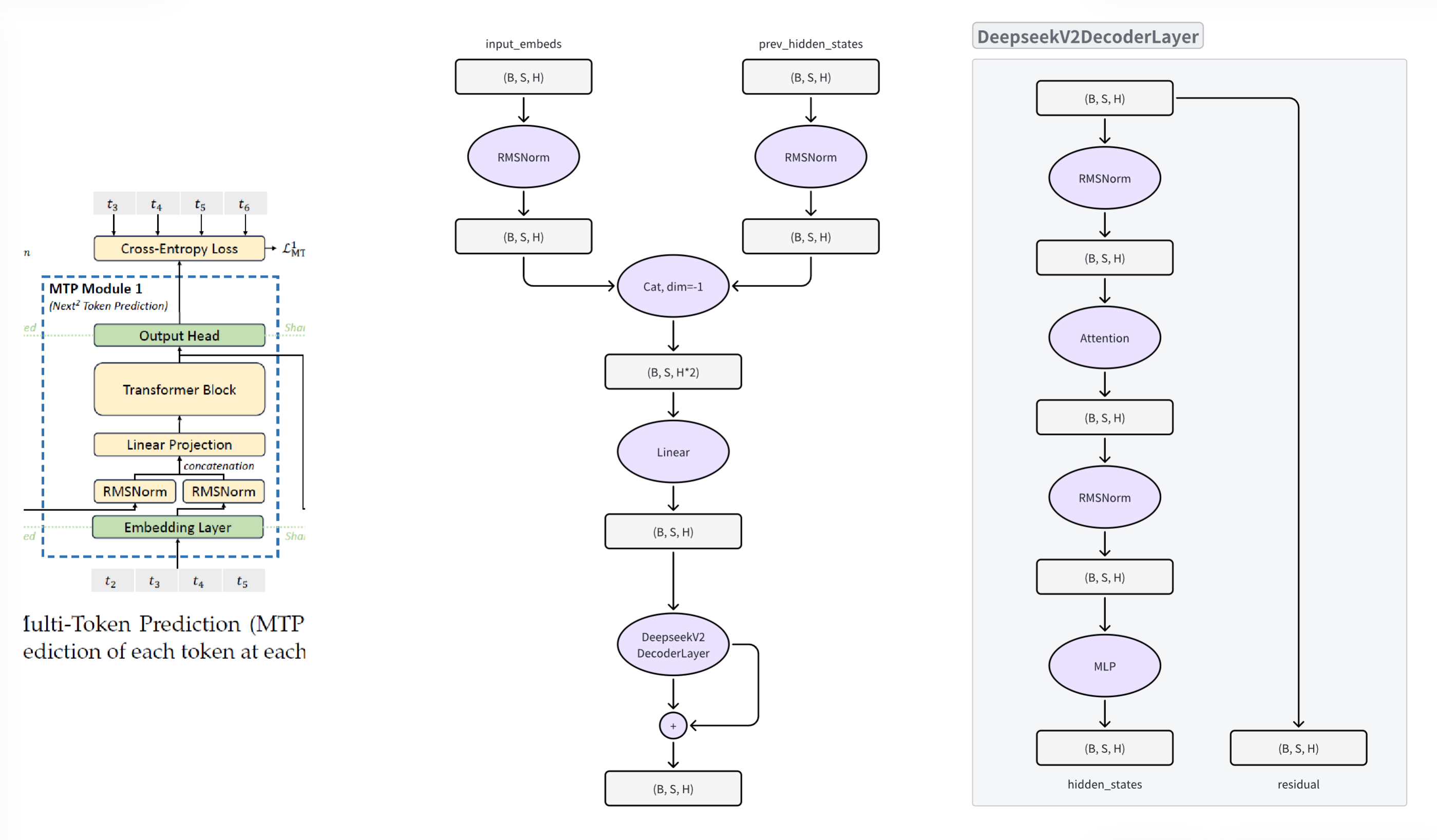

6. DeepseekMTP

7. Discussion

7.1. Performance Insights, Speedups, and Trade-offs

Ref: [vllm] | How Speculative Decoding Boosts vLLM Performance by up to 2.8x

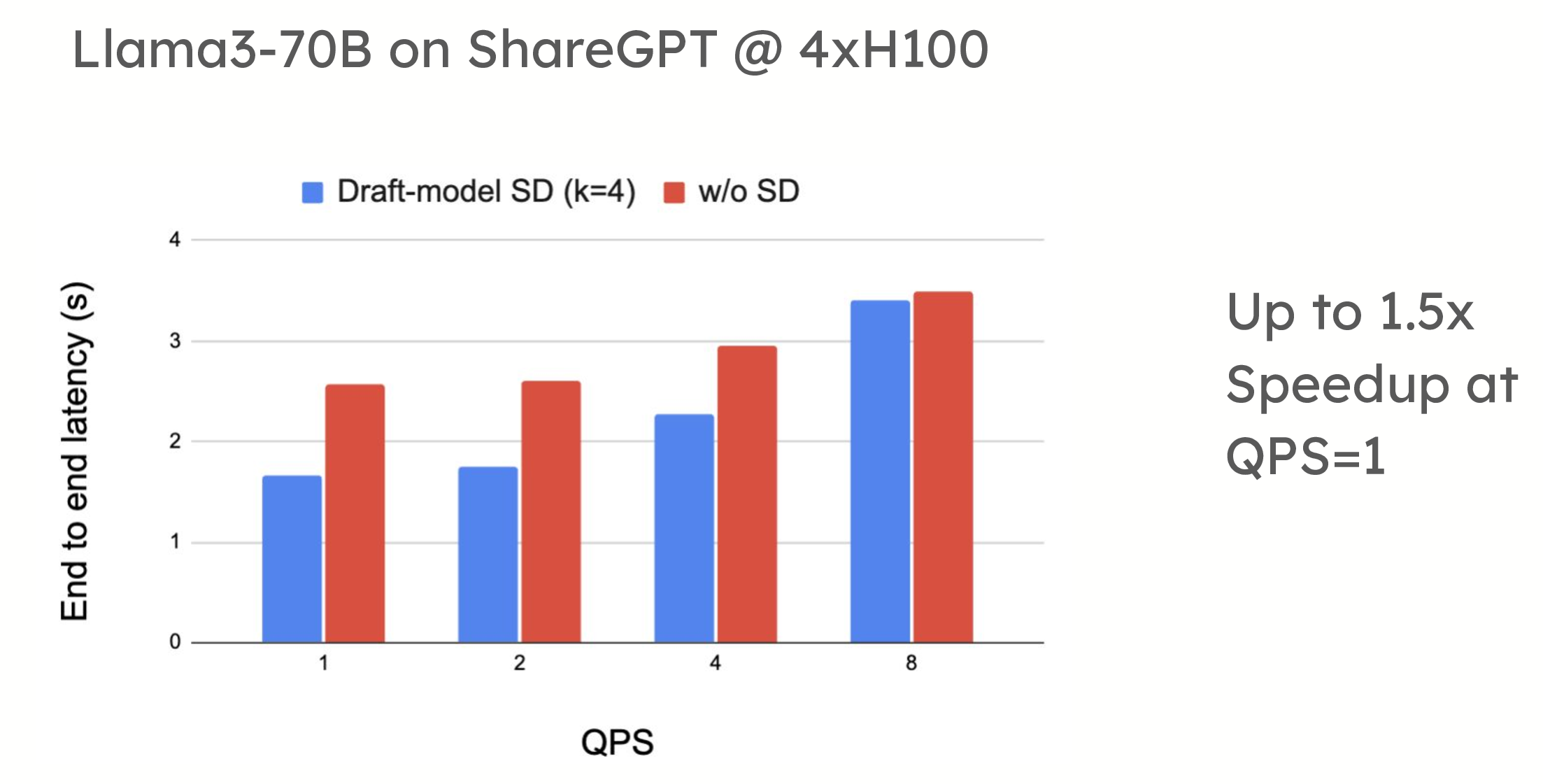

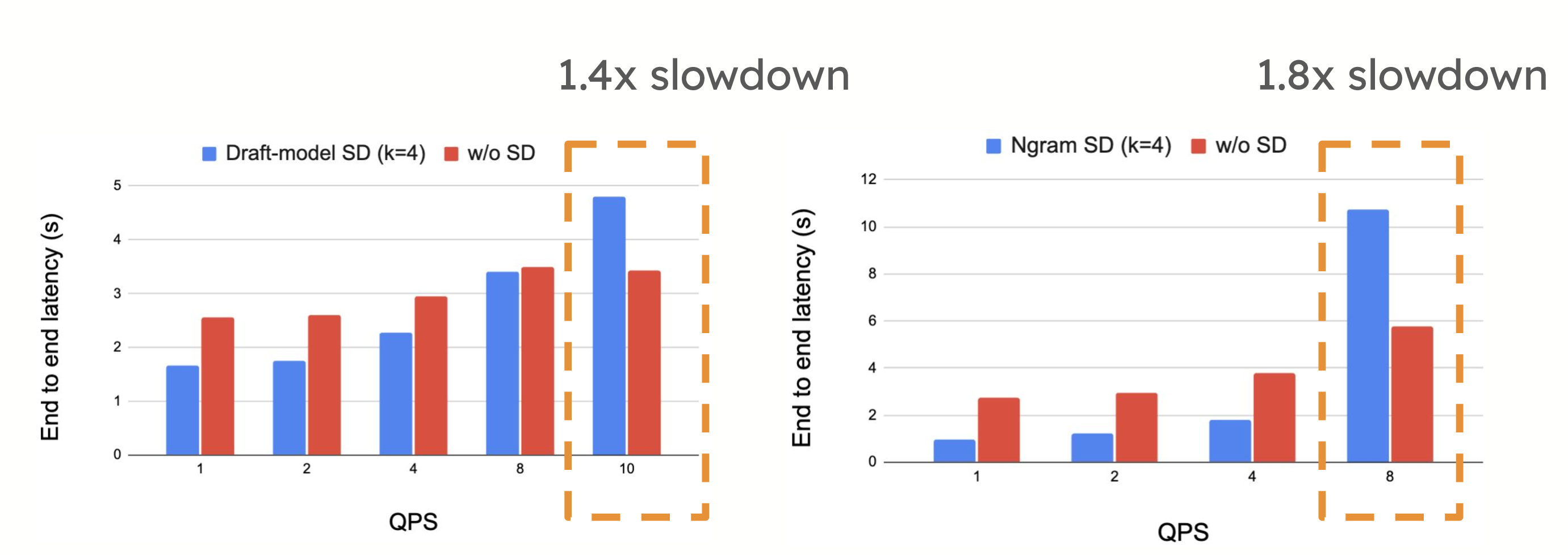

Speculative decoding offers significant performance benefits in low-QPS (queries per second) environments. For example, in testing on the ShareGPT dataset, vLLM demonstrated up to a 1.5x speedup in token generation when using draft model-based speculative decoding. Similarly, prompt lookup decoding has shown speedups of up to 2.8x when applied to summarization datasets, such as CNN/DailyMail.

However, in high-QPS environments, speculative decoding may introduce performance trade-offs. As the number of requests per second increases, the system becomes compute-bound, and the extra compute spent proposing and verifying tokens (including tokens that are ultimately rejected) competes with useful work. In such cases, the overhead of speculative decoding can outweigh its benefits and reduce performance.
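The expected-speedup model from the standard analysis of speculative decoding (Leviathan et al., 2023) makes this trade-off concrete. If each draft token is accepted independently with probability α, a step with k draft tokens produces (1 − α^{k+1}) / (1 − α) tokens on average. The sketch divides that by the step cost, measured in units of one ordinary target decode step: k·c for the draft, where c is the draft-to-target cost ratio, plus a `verify_cost` for scoring k + 1 tokens. `verify_cost` is an assumption added for illustration. It is about 1 when the target is memory-bound (low QPS) and grows toward k + 1 when the target is compute-bound (high QPS).

```python
def expected_tokens_per_step(alpha: float, k: int) -> float:
    """Expected tokens emitted per step with k draft tokens and acceptance rate alpha."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if alpha == 1.0:
        return float(k + 1)  # every draft token accepted, plus the bonus token
    return (1.0 - alpha ** (k + 1)) / (1.0 - alpha)


def estimated_speedup(alpha: float, k: int, c: float, verify_cost: float = 1.0) -> float:
    """Tokens per unit time relative to plain decoding (1 token per unit of time).

    c: cost of one draft step relative to one target decode step.
    verify_cost: assumed cost of scoring k + 1 tokens, in target decode steps.
    """
    step_cost = k * c + verify_cost
    return expected_tokens_per_step(alpha, k) / step_cost
```

With α = 0.8, k = 4, and c = 0.1, the model gives about 2.4x when verification costs one decode step. It drops to about 0.62x when verifying five tokens costs five decode steps, which mirrors the low-QPS versus high-QPS behavior described above. These numbers are illustrative, not measurements.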

7.2. Why exactly is batch expansion inefficient?

Ref: Optimizing attention for spec decode can reduce latency / increase throughput

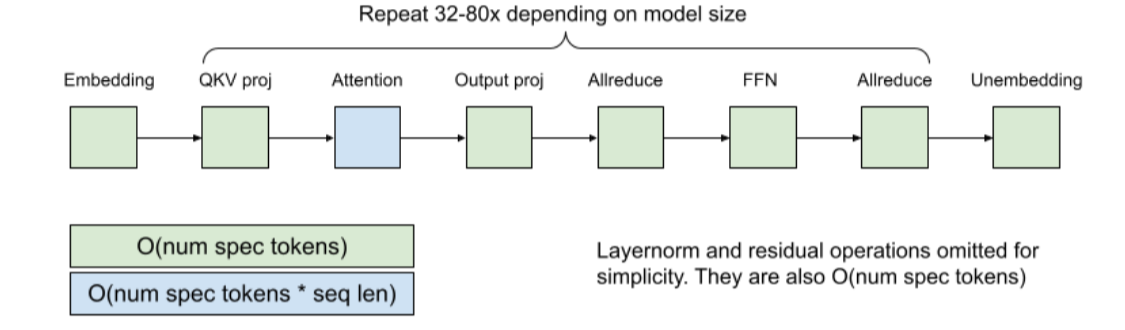

Looking at the Llama 2 architecture, each component has the algorithmic complexity shown above with respect to the number of speculative tokens and the sequence length. The baseline is non-speculative decoding, so factors such as d_model, which are the same in either case, are ignored.

Each of these scales linearly with the number of speculative tokens, except attention, which scales with num_spec_tokens * seq_len. This means that for large batch sizes, large speculative trees, and/or long sequences, attention becomes the computational bottleneck.

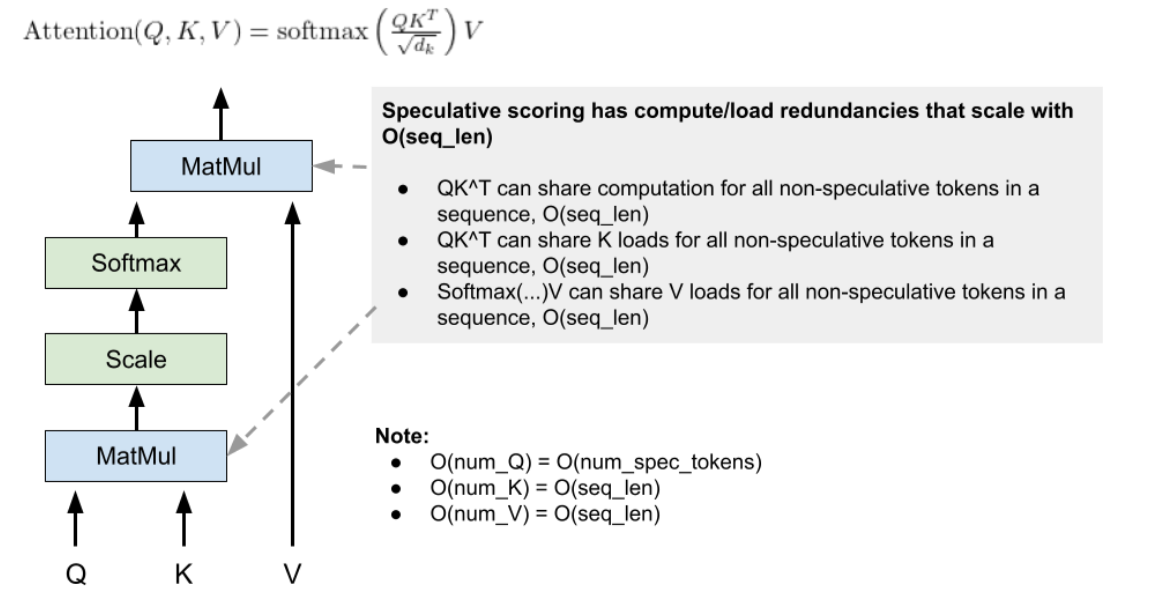

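The key to optimizing attention is that batch expansion turns each sequence with k speculative tokens into k + 1 sequences, each with a single query position. As a result, components of the attention operation are duplicated when scoring different speculative tokens that share the same prefix sequence.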

In theory, attention for speculative scoring can be optimized by reducing redundant QK^T computations and loads, as well as redundant loads for Softmax(...)V:

- Share K loads for common tokens

- Share QK^T compute for common tokens

- Share V loads for common tokens

We should experimentally verify this analysis: one weakness is that Softmax(...)V computation is still O(num_spec_tokens * seq_len).
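A back-of-the-envelope count shows where the duplicated loads come from. Consider one sequence with `prefix_len` keys visible to the first scored position and k speculative tokens. Query i (0 ≤ i ≤ k) attends to prefix_len + i keys. If every query reads its own context, as with batch expansion, the K rows loaded add up to roughly (k + 1) × prefix_len. Sharing loads across queries reads each distinct row once. V loads follow the same pattern. This simplified model ignores caching in the memory hierarchy, and the function is hypothetical.

```python
from typing import Tuple


def k_rows_loaded(prefix_len: int, num_spec_tokens: int) -> Tuple[int, int]:
    """Return (per_query_loads, shared_loads) of K rows for scoring one sequence.

    Query i (0..num_spec_tokens) attends to prefix_len + i keys.
    """
    # Batch-expansion style: every query re-reads its whole context.
    per_query = sum(prefix_len + i for i in range(num_spec_tokens + 1))
    # Shared style: each distinct K row is read once for all queries.
    shared = prefix_len + num_spec_tokens
    return per_query, shared
```

For a 1,000-token prefix and 4 speculative tokens, this comes to 5,010 versus 1,004 K-row loads, roughly a (k + 1)-fold reduction for long prefixes.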

References

- [vllm] | Speculative Decoding

- [vllm] | How Speculative Decoding Boosts vLLM Performance by up to 2.8x

- [vllm] | How to Use Speculative Decoding in vLLM

- [vllm][PR] | [Speculative Decoding] Medusa Implementation with Top-1 proposer #4978

- A Hitchhiker's Guide to Speculative Decoding

- [vllm] | What is lookahead scheduling in vLLM?

- Optimizing attention for spec decode can reduce latency / increase throughput

- [vllm][ISSUE] | [RFC]: Automate Speculative Decoding #4565

- [HF] | Faster Assisted Generation with Dynamic Speculation